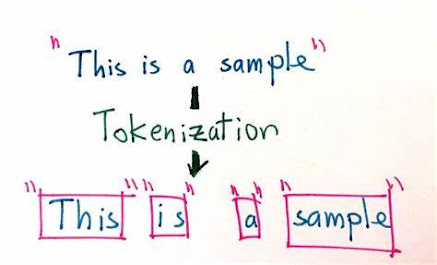

Tokenization is a fundamental step in Natural Language Processing (NLP): it breaks text into smaller units called tokens, which then serve as the input to a language model. Working with these smaller units makes text far easier for a machine to analyze than one long, unstructured string. In this blog post, we will explore tokenization in detail, including its types, use cases, and implementation.

What is Tokenization?

Tokenization is the process of converting a sequence of text into smaller parts, known as tokens. These tokens can be as small as individual characters or as long as whole words. Tokenization is akin to dissecting a sentence to understand its anatomy: just as doctors study individual cells to understand an organ, NLP practitioners use tokenization to dissect and understand the structure and meaning of text.
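
To make "input for a language model" a little more concrete, here is a minimal sketch of the step that usually follows tokenization: mapping each token to an integer ID. The whitespace split, the `build_vocab` and `encode` helpers, and the `<unk>` placeholder are assumptions made for illustration, not the API of any particular library.

```python
def build_vocab(tokens):
    """Assign each distinct token an integer ID, in order of first appearance."""
    vocab = {"<unk>": 0}  # hypothetical placeholder ID for unseen tokens
    for token in tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
    return vocab


def encode(tokens, vocab):
    """Replace each token with its ID, falling back to the <unk> ID."""
    return [vocab.get(token, vocab["<unk>"]) for token in tokens]


# Naive whitespace tokenization, used here only to keep the sketch short.
train_tokens = "the cat sat on the mat".split()
vocab = build_vocab(train_tokens)

ids = encode("the dog sat".split(), vocab)
print(ids)  # [1, 0, 3]  ("dog" was never seen, so it maps to <unk>)
```

The model never sees the strings themselves, only these IDs, which is why the choice of tokens matters so much.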

Types of Tokenization

Tokenization methods vary based on the granularity of the text breakdown and the specific requirements of the task at hand. These methods can range from dissecting text into individual words to breaking them down into characters or even smaller units. Here are some common types of tokenization:

1. Word Tokenization: This method breaks text down into individual words. It's a common and intuitive approach, and it is particularly effective for languages with clear word boundaries, such as English.

2. Character Tokenization: Here, the text is segmented into individual characters. This character-level breakdown is more granular and can be especially useful for languages that do not separate words with spaces, such as Chinese, or for tasks like spelling correction.

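3. Subword Tokenization: This method breaks words down into smaller subwords, for example "tokenization" into "token" and "ization". It's particularly useful for languages with complex morphology, such as German or Finnish, and for handling rare words that a word-level vocabulary would miss.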
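
The three granularities above can be compared side by side in a short sketch that uses only the Python standard library. The regular expression, the `subword_tokenize` helper, and the tiny `toy_vocab` are assumptions made for illustration. Real subword tokenizers learn their vocabulary from data, whereas this one simply does a greedy longest-match against a hand-written set.

```python
import re

text = "Tokenizers aren't magic."

# Word-level: runs of letters/digits/apostrophes, or single punctuation marks.
word_tokens = re.findall(r"[\w']+|[^\w\s]", text)

# Character-level: every character, including spaces, is a token.
char_tokens = list(text)


def subword_tokenize(word, vocab):
    """Greedy longest-match-first split of one word against a toy vocabulary."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        # Shrink the candidate until it matches a vocabulary entry.
        while end > start and word[start:end] not in vocab:
            end -= 1
        if end == start:  # nothing matched: fall back to a single character
            end = start + 1
        pieces.append(word[start:end])
        start = end
    return pieces


toy_vocab = {"token", "ization", "izer", "s"}  # hypothetical vocabulary

print(word_tokens)                                  # ['Tokenizers', "aren't", 'magic', '.']
print(char_tokens[:5])                              # ['T', 'o', 'k', 'e', 'n']
print(subword_tokenize("tokenization", toy_vocab))  # ['token', 'ization']
print(subword_tokenize("tokenizers", toy_vocab))    # ['token', 'izer', 's']
```

Note how the subword version reuses the piece "token" across both words, which is what lets a small vocabulary cover many related word forms.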

Use Cases of Tokenization

Tokenization is a crucial step in many NLP tasks, including:

1. Text Classification: Tokenization is used to convert text into a format that can be used as input for a machine learning model. For example, in sentiment analysis, tokenization is used to break down text into individual words, which are then used to predict the sentiment of the text.

2. Named Entity Recognition: Tokenization is the first step in identifying named entities in text. For example, a news article is first split into tokens, and a model then labels which tokens are the names of people, places, and organizations mentioned in the article.

3. Machine Translation: Tokenization is used to break down text into smaller parts that a translation model can process. For example, in English-to-French translation, the English text is split into tokens (words or subwords), the model reads those tokens, and it produces the French output as a new sequence of tokens.
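
As a rough illustration of the text classification use case above, the sketch below tokenizes a sentence and scores its sentiment by counting tokens. The `POSITIVE` and `NEGATIVE` word lists and the `tokenize` and `sentiment` helpers are hypothetical and hand-written for this example; a real classifier would learn its weights from labeled data rather than rely on fixed lists.

```python
import re
from collections import Counter

# Hypothetical, hand-picked word lists used only for this illustration.
POSITIVE = {"great", "love", "excellent"}
NEGATIVE = {"bad", "boring", "terrible"}


def tokenize(text):
    """Lowercase the text and keep runs of letters and apostrophes as tokens."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment(text):
    """Label a text by comparing counts of positive and negative tokens."""
    counts = Counter(tokenize(text))
    score = sum(counts[w] for w in POSITIVE) - sum(counts[w] for w in NEGATIVE)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(tokenize("I love this movie, great cast!"))
# ['i', 'love', 'this', 'movie', 'great', 'cast']
print(sentiment("I love this movie, great cast!"))    # positive
print(sentiment("Boring plot and terrible acting."))  # negative
```

Everything after `tokenize` works on tokens rather than raw text, which is the sense in which tokenization turns text into model input.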

Implementation of Tokenization

Tokenization can be implemented using various libraries and frameworks. Here's an example of how to implement word tokenization using Python's Natural Language Toolkit (NLTK) library:

```python
import nltk
from nltk.tokenize import word_tokenize

# word_tokenize needs NLTK's Punkt data, which is downloaded once. Recent NLTK
# releases name this resource "punkt_tab"; older releases use "punkt".
nltk.download("punkt_tab")

text = "Tokenization is the process of breaking down text into individual words or subwords, called tokens, which are then used as input for a language model."

tokens = word_tokenize(text)
print(tokens)
```

Output:

```
['Tokenization', 'is', 'the', 'process', 'of', 'breaking', 'down', 'text', 'into', 'individual', 'words', 'or', 'subwords', ',', 'called', 'tokens', ',', 'which', 'are', 'then', 'used', 'as', 'input', 'for', 'a', 'language', 'model', '.']
```

In this example, we first import the `nltk` library and the `word_tokenize` function, then download the Punkt tokenizer data that `word_tokenize` depends on. We then define a string `text` and tokenize it using the `word_tokenize` function. Finally, we print the resulting tokens. Note that punctuation marks such as `,` and `.` come out as separate tokens.

Summary

Tokenization is a crucial step in Natural Language Processing that involves breaking down text into smaller parts called tokens, whether words, subwords, or characters. It is used in many NLP tasks, including text classification, named entity recognition, and machine translation, and it can be implemented using various libraries and frameworks, such as Python's Natural Language Toolkit (NLTK). By breaking text into smaller parts, tokenization makes human language easier for machines to analyze. I hope this blog post has given you a solid understanding of tokenization and its use cases.
